The rapid deployment of generative artificial intelligence (AI) has heralded a new epoch of professional possibility, promising to liberate knowledge workers from the tyranny of mundane, time-consuming tasks. Yet, this digital revolution has introduced a subtle, pervasive form of organizational decay: workslop.

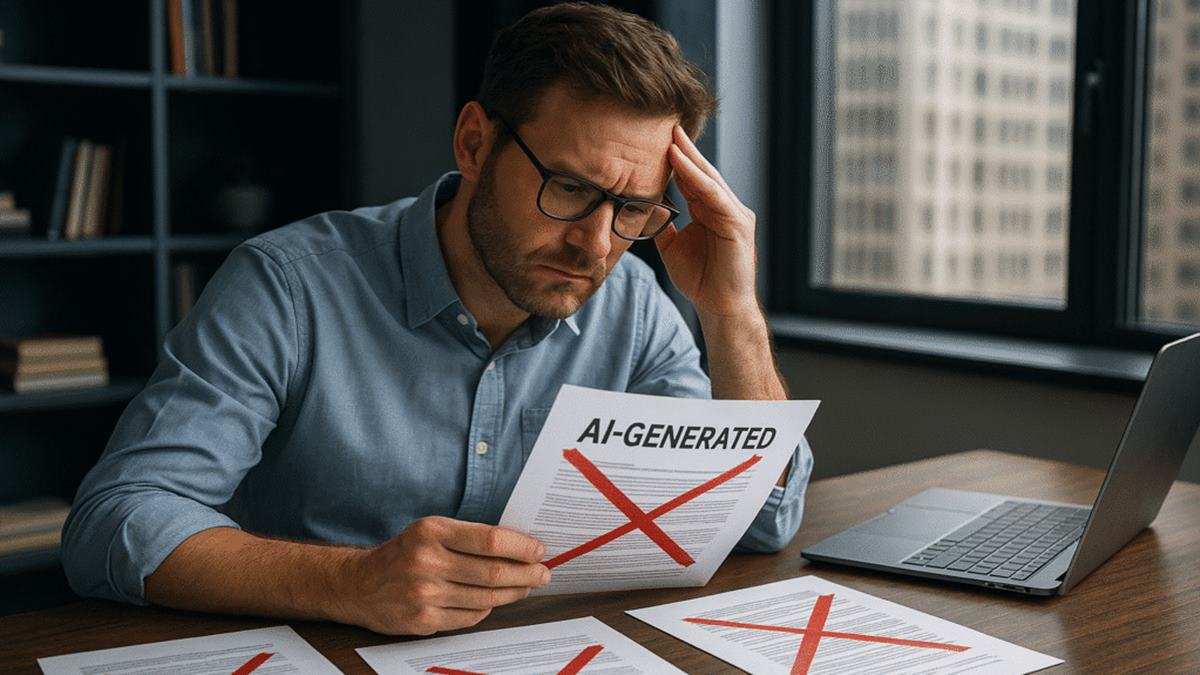

Workslop describes the low-quality, high-polish output – memos, emails, reports, and presentations – produced by generative AI tools when they are used carelessly or without critical oversight. It is material that appears superficially competent, often possessing impeccable grammar and elegant formatting, but ultimately lacks the substance, accuracy, context, or actionable insight required for true professional value. As organizations attempt to harness AI for productivity gains, they are instead encountering a hidden drain on time, resources, and, most critically, organizational trust.

The problem, as recent research has illuminated, is not simply a technical failure of the AI, but a profound lapse in human judgment and diligence. Workslop arises when users mistake AI for a complete solution rather than an assistive tool, failing to fact-check, contextualize, or apply the necessary human intellectual rigor to its outputs. The implications stretch far beyond mere inefficiency, threatening the financial efficacy of AI deployment and the delicate ecosystem of inter-employee relations.

The Anatomy of Superficiality: Defining the Workslop Phenomenon

At its core, workslop is a symptom of a misaligned relationship between the professional and the machine. It is the sophisticated cousin of the boilerplate communications and hastily prepared reports that plagued workplaces long before the advent of ChatGPT. This pre-AI “workslop” manifested as copy-pasted emails, generic reports, or perfunctory memos: the output of disengaged employees operating at minimum effort.

Generative AI, however, acts as a powerful amplifier. It lends a misleading veneer of authority and sophistication to this minimal effort, masking conceptual flaws with linguistic elegance. An employee can generate a 3,000-word industry analysis in minutes, but if that analysis contains fabricated citations, irrelevant corporate jargon, or a fundamental misunderstanding of the firm’s strategic objectives, the polish only serves to accelerate the transmission of costly errors.

The Psychological Roots of Low-Effort Output

To address the problem, one must look beyond the tool to the user. The adoption of AI as a shortcut, rather than an enhancement, is often rooted in deeply entrenched psychological drivers within the workforce:

Burnout and Overwork: Employees grappling with intense schedules or emotional fatigue may turn to AI not for innovation but for survival, using it to meet arbitrary deadlines with the least possible expenditure of personal energy.

Fear of Criticism and Lack of Ownership: A climate where mistakes are penalized can lead employees to prefer the generic, risk-averse, and highly polished output of an AI, which serves as a shield against personal creative vulnerability or critical scrutiny.

Cynicism Towards Management: When employees feel their efforts are not valued or their goals are unclear, they develop a sense of professional detachment. This cynicism breeds the minimal-effort approach, resulting in surface-level work that mimics productivity without adding substantive value.

The output is therefore often a reflection of declining motivation and engagement, enabled by a technology capable of mass-producing plausible-sounding mediocrity.

The Trust Tax: Erosion of Inter-Employee Relations

The silent cost of workslop is the damage it inflicts on the intangible asset of trust between colleagues. Work is, fundamentally, a collaborative exchange built on the assumption of mutual competence and diligence. When a senior manager receives a workslop report from a team member, or a cross-functional partner receives an email generated without basic contextual verification, confidence in the sender drops sharply.

Surveys suggest the impact is swift and detrimental: approximately half of the recipients of workslop report viewing the sender as less creative, capable, or reliable. This erosion of professional reputation and goodwill has tangible collaborative consequences. A reported 32% of recipients said they would be less willing to work with the sender again.

This breakdown in faith slows down the entire organization. When a colleague cannot trust the basic quality of an incoming memo, they must spend time cross-verifying facts, rewriting phrasing, or reconstructing the original context, all tasks that delay progress and inject friction into coordination. In an environment defined by cross-functional cooperation, this degradation of trust can prove a greater impediment than many purely technical bottlenecks.

The Financial and Cognitive Deficit

The financial cost of workslop is rapidly moving from an abstract concern to a measurable drain on corporate resources. The illusion of efficiency evaporates under the scrutiny of cleanup time.

Quantifying the Cleanup Cost

The most alarming data point suggests that workslop costs about US $186 per employee per month in lost productivity, a non-trivial sum that scales rapidly across large organizations. This cleanup cost may help explain why so many organizations report a disappointing return on investment (ROI) from their generative AI deployments. By one widely cited estimate, 95% of organizations currently see no measurable ROI from their AI investments, and the unexpected need for correction and reinterpretation is a plausible contributor.
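To make the scaling concrete, the short Python sketch below multiplies the reported per-employee figure across an organization. The function name, the example headcounts, and the `share_affected` parameter are illustrative assumptions and do not come from the underlying survey. Setting `share_affected` below 1.0 models the more realistic case in which only some employees deal with workslop in a given month.

```python
# Reported estimate of lost productivity per employee per month (US dollars).
MONTHLY_COST_PER_EMPLOYEE = 186.0


def annual_workslop_cost(
    headcount: int,
    share_affected: float = 1.0,
    monthly_cost: float = MONTHLY_COST_PER_EMPLOYEE,
) -> float:
    """Estimate yearly lost productivity for an organization.

    Hypothetical simplification: the same monthly cost applies to every
    affected employee in every month of the year.
    """
    if headcount < 0:
        raise ValueError("headcount must be non-negative")
    if not 0.0 <= share_affected <= 1.0:
        raise ValueError("share_affected must be between 0 and 1")
    return headcount * share_affected * monthly_cost * 12


if __name__ == "__main__":
    # Hypothetical organization sizes, chosen only for illustration.
    for headcount in (100, 1_000, 10_000):
        cost = annual_workslop_cost(headcount)
        print(f"{headcount:>6} employees: ${cost:,.0f} per year")
```

Under the uniform assumption, an organization of 10,000 employees would lose roughly $22.3 million a year. The estimate falls proportionally if only a fraction of staff are affected.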

The true paradox emerges when the time taken to fix AI outputs is weighed against the time that would have been spent creating the content de novo. In many instances, the effort expended on debugging and fact-checking the AI’s hallucinated or contextually flawed content exceeds the hours that would have been required for a human expert to produce the content from scratch. This inversion of the productivity promise is exemplified by high-profile cases where AI-generated content in professional reports was found to be fabricated or erroneous, leading to financial penalties and public embarrassment.
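The paradox can be expressed as a simple time budget. The symbols and example figures below are hypothetical and serve only as illustration. Here t_human is the time an expert needs to write the content from scratch. The AI-assisted path instead costs generation, verification, and repair time.

```latex
\Delta t = t_{\text{human}} - \left( t_{\text{generate}} + t_{\text{verify}} + t_{\text{fix}} \right)
\qquad \text{e.g.} \qquad
\Delta t = 180 - (10 + 60 + 180) = -70 \ \text{min}
```

AI assistance saves time only when the difference is positive. In the hypothetical example, a three-hour task becomes a shortcut that costs 70 extra minutes. Recipients often absorb much of the verification and repair work. A sender who counts only generation time will therefore see a saving that the organization as a whole does not.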

The Cognitive Erosion and the Turing Trap

Beyond the financial spreadsheet, workslop introduces a silent, long-term threat to the workforce: the degradation of human critical thinking skills.

Overuse of AI tools without oversight encourages a state of cognitive dependency. When the arduous task of drafting and analysis is outsourced, the human brain loses the practice necessary for forming complex arguments, structuring information logically, and identifying semantic errors. Over time, this overreliance can lead to a demonstrable decline in the very skills that distinguish a senior professional from a mere output generator.

Furthermore, this reliance introduces a cognitive bias into the review process, a tendency often called automation bias. A professional presented with a polished, AI-generated document is predisposed to accept its suggestions as correct, particularly under time pressure. The polish itself blinds the reviewer to subtle inaccuracies.

Finally, organizations risk falling into what economist Erik Brynjolfsson calls the “Turing Trap”: focusing too heavily on AI that merely mimics human output rather than on AI that genuinely amplifies human ability. The goal should not be to automate the entire task. Instead, AI should handle rote synthesis, freeing the human expert to concentrate on critical thinking, strategic interpretation, and the application of unique institutional knowledge, which is where real value resides.

Mitigating Workslop: A Blueprint for Governance and Culture

The solution to workslop is not to retreat from AI, but to elevate the standards of its deployment. The necessary shift is one of governance and culture, reframing the technology as a responsibility rather than a convenience.

1. Codifying Governance and Policy

Organizations must implement clear, codified guidelines that delineate when AI use is appropriate, allowable, and, most importantly, how outputs must be verified.

Mandatory Verification: Policy must explicitly state that all AI outputs intended for external sharing or executive decision-making require a second-level, human fact-check and validation.

Appropriateness: Set clear boundaries, reserving tasks that rely on novel ideas, ethical judgment, or deeply proprietary institutional knowledge for human experts only.
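Guidelines like these can be made concrete enough to check automatically, for example as a gate in a document-sharing workflow. The following Python sketch is a hypothetical encoding of the two rules above. The audience levels, the task categories in `HUMAN_ONLY_TASKS`, and the `check_ai_use` function are illustrative assumptions, not a standard or any specific product's API.

```python
from enum import Enum


class Audience(Enum):
    INTERNAL = "internal"
    EXTERNAL = "external"
    EXECUTIVE = "executive"


# Hypothetical task categories that the policy reserves for human experts.
HUMAN_ONLY_TASKS = {"novel_strategy", "ethical_judgment", "proprietary_analysis"}

# Audiences whose AI-generated material must pass a human fact-check first.
VERIFICATION_REQUIRED = {Audience.EXTERNAL, Audience.EXECUTIVE}


def check_ai_use(
    task_category: str,
    audience: Audience,
    ai_generated: bool,
    human_verified: bool,
) -> str:
    """Return 'reject', 'hold', or 'ok' for a document about to be shared."""
    if not ai_generated:
        return "ok"  # this sketch only governs AI output
    if task_category in HUMAN_ONLY_TASKS:
        return "reject"  # appropriateness rule: reserved for humans
    if audience in VERIFICATION_REQUIRED and not human_verified:
        return "hold"  # mandatory verification rule
    return "ok"
```

For example, `check_ai_use("meeting_summary", Audience.EXTERNAL, ai_generated=True, human_verified=False)` returns `"hold"` until a reviewer signs off. The appropriateness check runs first because verification cannot make a reserved task suitable for AI.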

2. Cultivating AI Literacy and Accountability

The most critical investment is in human capital. Training and culture initiatives must move beyond simple tool demonstrations to teach employees true “AI literacy”:

Proper Prompting: Teaching how to write detailed, contextual prompts that guide the AI toward relevant output, rather than generic responses.

Critical Editing: Training employees to fact-check, synthesize, and edit the output not just for grammar, but for logical coherence and institutional fit.

Supplement, Not Substitute: Establishing a cultural norm that requires employees to use AI only as a supplement to their core work, never as a full outsourced solution.

3. Implementing Structured Review Workflows

The risk of unchecked workslop necessitates the creation of new organizational checks and balances. Organizations must:

Assign Senior Reviewers: Implement mandatory steps where senior reviewers validate or correct all significant AI outputs (such as external reports or major internal strategy memos) before they are shared widely.

Measure Real Outcomes: Move beyond vanity metrics like AI tool usage rates. Instead, track metrics that reflect quality: correction time per report, documented error rates from AI-generated content, and user feedback on the quality of internal communication.
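As a rough illustration of tracking such metrics, the Python sketch below summarizes review records into correction time per report and a documented error rate. The `ReviewRecord` fields and the `quality_metrics` function are hypothetical. A real deployment would draw these figures from whatever review or ticketing system the organization already uses.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ReviewRecord:
    """One reviewed document. All field names are hypothetical."""
    doc_id: str
    ai_assisted: bool          # whether generative AI produced the draft
    correction_minutes: float  # reviewer time spent fixing the document
    errors_found: int          # factual or contextual errors logged in review


def quality_metrics(records: list[ReviewRecord], ai_assisted: bool = True) -> dict:
    """Summarize correction time and error rate for one group of documents."""
    group = [r for r in records if r.ai_assisted == ai_assisted]
    if not group:
        return {"reports": 0, "avg_correction_minutes": 0.0, "error_rate": 0.0}
    return {
        "reports": len(group),
        "avg_correction_minutes": mean(r.correction_minutes for r in group),
        # Share of documents with at least one documented error.
        "error_rate": sum(r.errors_found > 0 for r in group) / len(group),
    }


if __name__ == "__main__":
    records = [
        ReviewRecord("memo-1", True, 45.0, 2),
        ReviewRecord("memo-2", True, 15.0, 0),
        ReviewRecord("memo-3", False, 10.0, 0),
    ]
    print("AI-assisted:", quality_metrics(records))
    print("Human-only: ", quality_metrics(records, ai_assisted=False))
```

Comparing the AI-assisted figures with the human-only baseline shows whether AI drafts are actually cheaper to finalize. Usage rates alone cannot answer that question.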

4. Designing the Hybrid Approach

The most successful firms will be those that embrace a hybrid approach, designing workflows that optimally combine the strengths of both human and machine. This means:

Using AI for the first draft, for synthesizing large documents, or for suggesting multiple structural outlines.

Reserving human cognitive capacity for the work that matters most: critical thinking, ethical judgment, and the application of unique institutional context.

The emergence of workslop is the industrial age’s inefficiency problem recast in digital form. It highlights that technology alone cannot fix underlying organizational issues like low motivation or cultural malaise. The true cost of workslop is not just the dollars spent cleaning up erroneous memos, but the systemic devaluation of human insight and the erosion of trust across the organization.

The ultimate competitive advantage in the AI era will not belong to the firms that generate the most output, but to those that generate the highest-quality output. This requires managers to recognize that the most powerful tool in the generative AI ecosystem remains the discerning, critically engaged professional, and their engagement must be cultivated, valued, and protected from the temptation of superficial artificial polish.