Recent headlines have drawn attention to the emotional connections some users are forming with AI tools like ChatGPT. Most notably, OpenAI CEO Sam Altman described what he saw as a “heartbreaking” trend in which people felt more supported by the chatbot than by anyone in their lives.

Speaking on a podcast earlier this month, Altman described the newly launched GPT-5 as “less of a yes man”, but also expressed shock and concern at feedback from users who missed the older version because, as he recounted them saying, “I’ve never had anyone in my life be supportive of me. I never had a parent tell me I was doing a good job.”

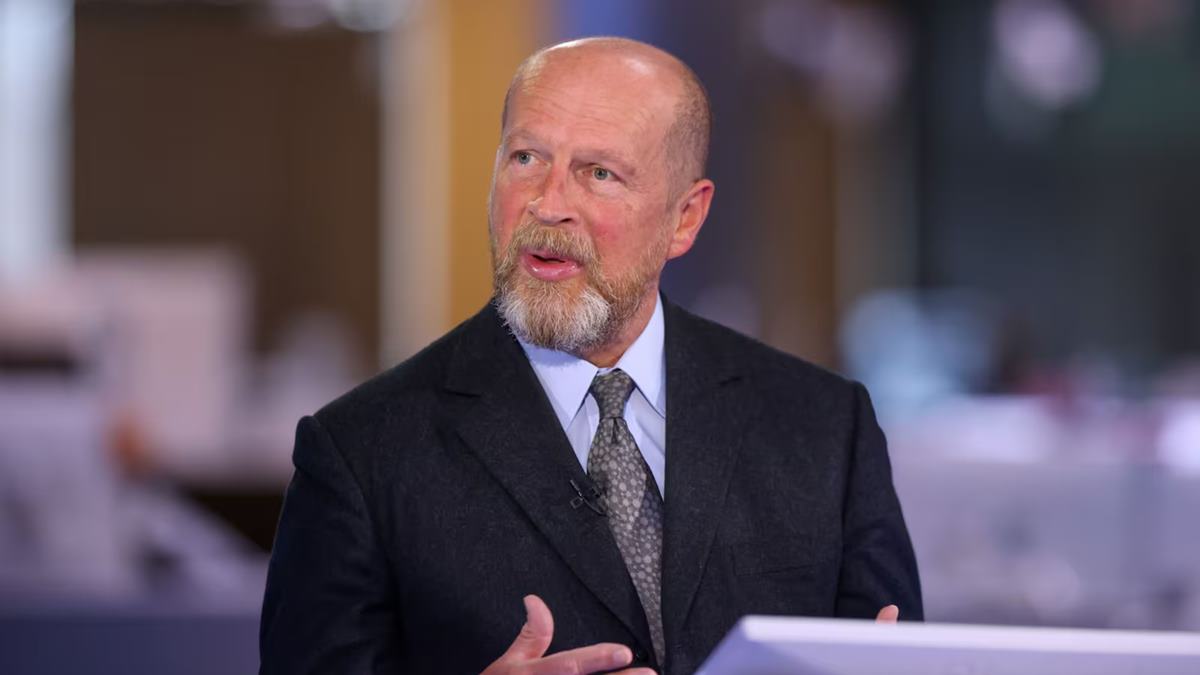

However, Catherine Connelly, human resources professor and Canada research chair in organizational behaviour at McMaster University, urges employers and HR professionals to be cautious when reading these and similar stories.

“My impression is that this is marketing hyperbole,” she says.

“I think people are using these tools widely, but I also think adult professionals know that these are tools.”

Managing AI reliance in the workplace

Altman has acknowledged the scale of influence that comes with even small changes to ChatGPT’s tone, stating, “One researcher can make some small tweak to how ChatGPT talks to you — or talks to everybody — and that’s just an enormous amount of power for one individual making a small tweak to the model personality.”

For Connelly, the real takeaway for employers is about workplace culture, management and communication with employees.

“If any manager or an HR lead in a company notices that an employee is going to ChatGPT for advice instead of their manager or their coworkers, that’s a broader problem,” she says.

“There are certain things you just don’t want to ask ChatGPT to do.”

She emphasizes the importance of a supportive environment and “compassionate” managers who keep lines of communication open, so that employees turn to them before resorting to an AI tool.

“A compassionate manager who has a strong relationship with their direct reports will create an environment where even a new employee feels comfortable talking to them about ‘Hey, how do I pitch this client?’ or ‘I have this problem, what should I do?’” she explains.

“A good manager will be doing that, and they’ll also create a work environment where people ask each other for advice instead of going to ChatGPT.”

Policy, training and the limits of AI

Connelly stresses the need for clear policies and employee education around the limits of AI in the workplace, including its limits as a stand-in for teammates or social connections.

She points out the dangers of overestimating AI’s capabilities: not only the loss of mentorship or connection, but also the risk of bad or harmful information.

“With Google or any other search engines, at least you’re seeing the sources where something is coming from,” she explains. With ChatGPT, that access to sources, and with it any way to check accuracy, is missing.

“There’s no sources, typically, and so it’s just presented with a great deal of confidence,” she says.

“But there’s no way to evaluate it, and so it should be assumed that it’s not credible.”

Balancing technology and human connection

Connelly believes that while AI can be a helpful tool, it should not replace human relationships in the workplace. She advises a renewed focus on mentorship, with new or early-career employees encouraged to approach mid- to late-career colleagues for advice and guidance.

“Take a new employee, maybe they don’t know what they need to do in a given situation. They could Google it, or they could ask ChatGPT, and they’ll get an answer. It’s probably not a great answer, and they’ll muddle along,” she says.

“Or, alternatively, they could ask one of their senior colleagues, and they have coffee together, they build that rapport. It’s a stronger relationship … by having that human conversation, you understand a better part of the context. You know what questions you should be asking. You get your answer, but you get more than just the answer.”

This exchange of valuable organizational knowledge can’t be replicated with an AI tool, Connelly stresses, as the deeper context is lost.

“Maybe as part of onboarding, make it clear that asking questions is a normal and expected part of the job, and even add in that ChatGPT will not have good advice for you in a lot of situations,” she says.

“Because they don’t work at this company. They don’t know our clients. Also, they’re AI.”