With artificial intelligence (AI) now a widely accessible cutting-edge tool, many businesses are urging their employees to use the free apps to speed up and improve their work. But as IT managers who contributed to a recent Reddit thread pointed out, that casual approach creates considerable risks of strategic data loss for companies, unless they establish clear and effective rules for how and when workers upload content to those less-than-secure third-party platforms.

Those observations were made in an IT/Managers subreddit thread this month titled “Copy. Paste. Breach? The Hidden Risks of AI in the Workplace.” In it, participants discussed the opening question of whether employee use of third-party AI apps “without permission, policies, or oversight” risks leaving their companies “sleepwalking into a compliance minefield” of potential data theft. The general answer was affirmative, and then some.

“Too late, you’re already there,” said a contributor called Anthropic_Principles, who then referred to other programs, apps, and even hardware that employees use without companies authorizing them as official business tools. “As with all things Shadow IT related. Shadow IT reflects the unmet IT needs in your organization.”

In explaining that, redditors noted a major irony feeding the problem.

It starts with employees needing AI-powered help to transcribe recordings of meetings, or to boil lengthy documents down into summaries or emails. But even though widely used business tech like Microsoft Teams and Zoom already contains those capabilities, companies frequently deactivate them to prevent confidential information from being stored on outside servers.

Deprived of those, workers often turn to the same alternative AI bots that bosses urged them to use for less sensitive tasks. Most do so unaware that meeting transcriptions and in-house documents stored on those apps’ servers are nowhere near as safe from hackers as they are in the highly protected systems of corporate partners like Microsoft, whose tools were deactivated on security grounds in the first place.

“This creates a perfect storm: employees need AI-powered summaries to stay productive, but corporate policies often restrict the very tools that could provide them safely,” notes a recent article in unified communications and tech news site UC Today. “So they turn to the path of least resistance—free, public AI tools that offer no data protection guarantees.”

That’s increasingly resulting in expensive and painful self-inflicted damage to businesses.

A recent survey by online risk management and security specialist Mimecast found the rate of data theft, loss, or leaking arising from employees uploading content to third-party platforms has risen by an average of 28 percent per month since 2021. The typical cost to companies victimized by such incidents is around $15 million each, part of the total $10.5 trillion in losses from global cyberattacks forecast this year.

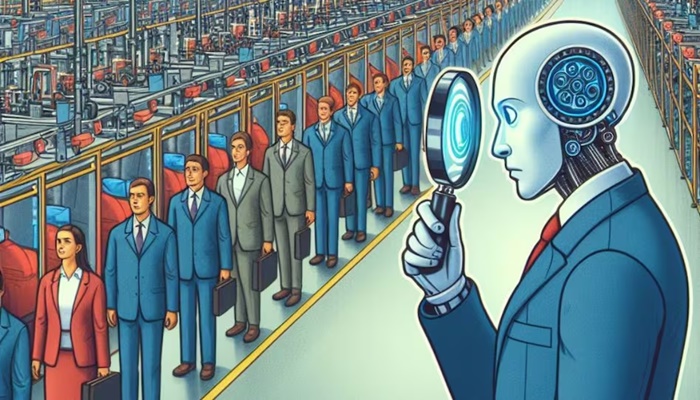

How can businesses escape that circular trap? Contributors to the Reddit thread and UC Today have some suggestions. They’re based on the assumption that employees must and will use AI-driven tools, but that their companies need to ensure they do so safely.

For starters, they say, businesses should approve use of AI applications integrated into programs like Teams or 365 Copilot that they already use for other tasks, and whose security protections are far stronger than those of freestanding online apps. From there, UC Today recommends:

- Auditing current AI usage across your company among employees at all levels

- Using that to develop clear AI rules that balance security requirements with user productivity objectives

- Educating and training employees on the benefits AI can offer them, as well as the risks it represents in various use cases

- And finally, monitoring and enforcing those policies with security tools or in-house IT managers to ensure compliance, and to detect any accidental or intentional corner-cutting by staff.

“This is not an IT issue at its heart,” noted subredditor Sad-Contract9994, saying it is more about broader data loss prevention (DLP) efforts. “This is a governance and DLP issue. There should be company policy on when and where company data can be exfiltrated off your systems—to any service at all.”